Abstract

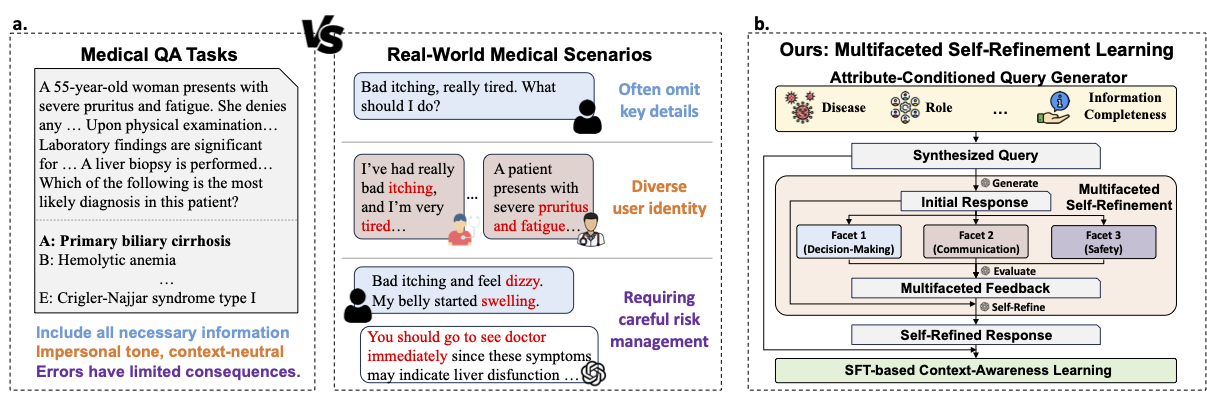

Large language models (LLMs) have shown great promise in the medical domain, achieving strong performance on several benchmarks. However, they continue to underperform in real-world medical scenarios, which often demand stronger context-awareness, i.e., the ability to recognize missing or critical details (e.g., user identity, medical history, risk factors) and provide safe, helpful, and contextually appropriate responses. To address this issue, we propose Multifaceted Self-Refinement (MuSeR), a data-driven approach that enhances LLMs' context-awareness along three key facets (decision-making, communication, and safety) through self-evaluation and refinement. Specifically, we first design an attribute-conditioned query generator that simulates diverse real-world user contexts by varying attributes such as role, geographic region, intent, and degree of information ambiguity. An LLM then responds to these queries, self-evaluates its answers along the three facets, and refines its responses to better align with the requirements of each facet. Finally, the queries and refined responses are used for supervised fine-tuning to reinforce the model's context-awareness. Evaluation results on the recent HealthBench dataset demonstrate that our method significantly improves LLM performance across multiple aspects, with particularly notable gains on the context-awareness axis. Furthermore, by combining knowledge distillation with the proposed method, a smaller backbone LLM (e.g., Qwen3-32B) surpasses its teacher model (GPT-oss-120B), setting a new state of the art (SOTA) among open-source LLMs on HealthBench (63.8%) and its hard subset (43.1%).

Method

To address the limitations of current medical LLMs in real-world scenarios, we introduce MuSeR (Multifaceted Self-Refinement), an approach that enhances LLMs' medical context-awareness in two steps: it synthesizes queries that simulate real-world medical requests, and it produces context-aware responses by having the LLM self-refine its answers along several facets of context-awareness. Specifically, MuSeR features two core components:

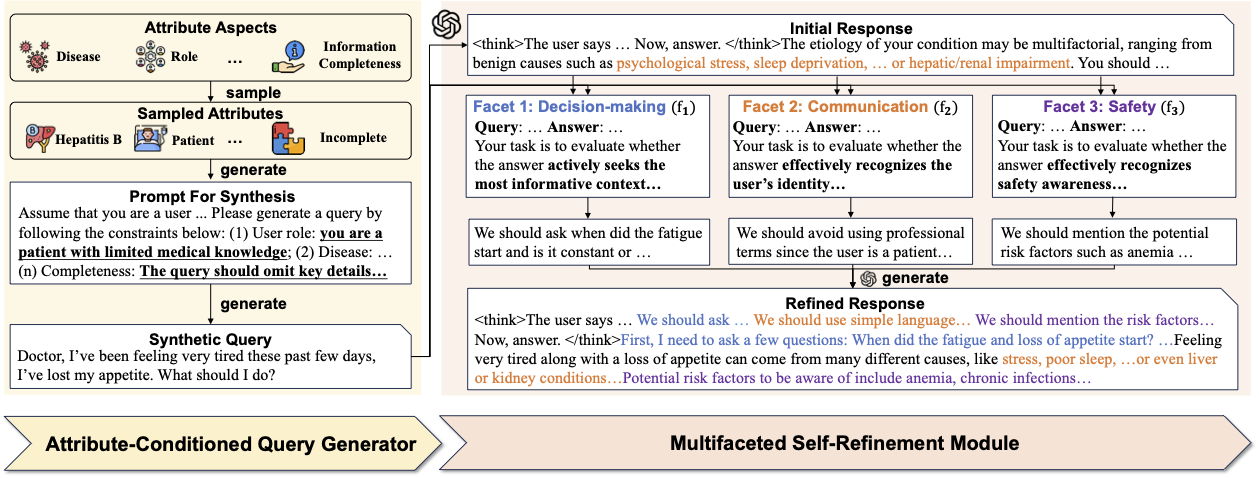

1. Attribute-Conditioned Query Generation

We introduce a query generator to simulate the complexity of the real-world query distribution. Specifically, we assume that each real-world query is governed by a set of attributes (e.g., user role, intent, geographic location). Building on this assumption, the attribute-conditioned query generator first samples a set of attributes from a prior distribution and then uses an LLM to generate a query conditioned on the sampled attributes. Our framework considers seven key attributes for query generation: (1) user identity, (2) geographic region, (3) the specific disease or injury concerned, (4) user intent, (5) the vagueness of the query, (6) the completeness of the provided details, and (7) the language style.

In our implementation, we generate 100k high-quality queries with the proposed attribute-conditioned query generator using DeepSeek-V3. We observe that the generated queries exhibit a high degree of diversity in terms of both linguistic style and medical topics, closely resembling real-world medical queries.
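The sample-then-generate procedure described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the attribute values, the uniform prior, the prompt wording, and the `echo_llm` stand-in are all assumptions, since the text names the seven attributes but does not specify their values or the prompt.

```python
import random

# Hypothetical attribute values (assumption): the source lists the seven
# attribute names but not the values each one can take.
ATTRIBUTE_PRIOR = {
    "user_identity": ["patient", "caregiver", "doctor"],
    "geographic_region": ["East Asia", "Sub-Saharan Africa", "Western Europe"],
    "disease_or_injury": ["type 2 diabetes", "ankle sprain", "skin rash"],
    "user_intent": ["seek diagnosis", "ask about treatment", "ask about prevention"],
    "vagueness": ["low", "medium", "high"],
    "completeness": ["complete", "partial", "minimal"],
    "language_style": ["formal", "colloquial", "terse"],
}


def sample_attributes(rng):
    """Draw one value per attribute. A uniform prior is assumed here."""
    return {name: rng.choice(values) for name, values in ATTRIBUTE_PRIOR.items()}


def build_generation_prompt(attributes):
    """Turn the sampled attributes into an instruction for the generator LLM."""
    lines = [f"- {name}: {value}" for name, value in attributes.items()]
    return (
        "Write one realistic medical query a user might send to an assistant.\n"
        "The query must reflect these attributes:\n" + "\n".join(lines)
    )


def generate_query(llm, rng):
    """Sample attributes, then ask the LLM for a query conditioned on them."""
    attributes = sample_attributes(rng)
    prompt = build_generation_prompt(attributes)
    return attributes, prompt, llm(prompt)


def echo_llm(prompt):
    """Stand-in for a real LLM call (assumption); returns a placeholder string."""
    return f"[query generated from a {len(prompt)}-character prompt]"


rng = random.Random(0)
attributes, prompt, query = generate_query(echo_llm, rng)
print(attributes)
print(query)
```

Replacing `echo_llm` with a call to an actual model and looping over many sampled attribute sets would yield a synthetic query pool; controlling diversity then reduces to shaping the prior over attributes.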

2. Multifaceted Self-Refinement

Based on the synthetic queries, we further devise a multifaceted self-refinement module where an LLM responds to the generated queries, evaluates its answers along the three key facets, and refines its responses to better align with the requirements of each facet. We primarily consider three key facets of context-awareness that are crucial for providing safe, helpful, and appropriate responses in the medical domain:

- Decision-Making Awareness: This facet focuses on identifying critical information (e.g., medical history, medication, examination results) essential for accurate medical decision-making, as well as actively seeking missing details from users when necessary. Such awareness is critical for ensuring the accuracy and practical utility of medical advice.

- Communication Awareness: This facet involves recognizing the user's identity (e.g., patient, doctor) and response preferences, and tailoring both terminology (e.g., layman vs. professional) and level of detail (e.g., brief vs. comprehensive) accordingly. This facet is essential for providing responses that match the user's knowledge background and expectations.

- Safety Awareness: This facet requires the model to recognize potential risk factors (e.g., symptom severity, underlying conditions) and ethical considerations (e.g., the use of unproven drugs) in its responses. Such awareness is vital for ensuring both the safety and ethical integrity of the medical advice provided.

For each generated query, the target LLM first generates an initial response. Subsequently, the LLM self-evaluates the answer along each facet above and generates a supplementary rationale to explain how the answer can be improved to better align with the requirements of the facet. For example, for the decision-making awareness facet, the model may identify missing critical information in the query and generate a rationale such as "We should ask about the patient's current medications to make an accurate diagnosis". Finally, the refined answer is generated by prompting the LLM to directly refine the initial answer based on the query and the generated rationales.

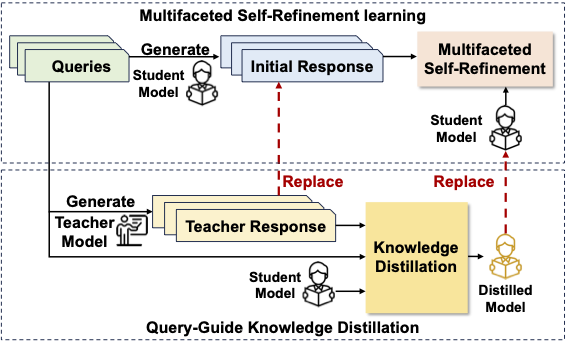
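The respond, self-evaluate, and refine loop described above can be sketched as below. This is a sketch under stated assumptions: the prompt wording, the `FACETS` descriptions (paraphrased from the facet definitions above), and the `stub_llm` stand-in are illustrative and are not the authors' prompts.

```python
# Short requirement per facet, paraphrased from the facet definitions above.
FACETS = {
    "decision-making": "Identify critical information and ask for missing details.",
    "communication": "Match terminology and level of detail to the user.",
    "safety": "Recognize risk factors and ethical considerations.",
}


def self_refine(llm, query):
    """Run one pass: initial answer, one rationale per facet, refined answer."""
    initial = llm(f"User query:\n{query}\n\nAnswer the query.")

    # Self-evaluation: one improvement rationale per facet.
    rationales = {}
    for facet, requirement in FACETS.items():
        rationales[facet] = llm(
            f"User query:\n{query}\n\nAnswer:\n{initial}\n\n"
            f"Facet: {facet} awareness. {requirement}\n"
            "Explain how the answer can be improved for this facet."
        )

    # Refinement: rewrite the initial answer using the query and all rationales.
    rationale_text = "\n".join(f"- ({f}) {text}" for f, text in rationales.items())
    refined = llm(
        f"User query:\n{query}\n\nInitial answer:\n{initial}\n\n"
        f"Improvement rationales:\n{rationale_text}\n\n"
        "Rewrite the answer so that it addresses every rationale."
    )
    return {"query": query, "initial": initial,
            "rationales": rationales, "refined": refined}


# Stand-in LLM (assumption): records each prompt and returns a numbered string.
calls = []


def stub_llm(prompt):
    calls.append(prompt)
    return f"output-{len(calls)}"


result = self_refine(stub_llm, "I got a rash after my shot. What should I do?")
print(len(calls), "LLM calls")
print(result["refined"])
```

With three facets, each query costs five LLM calls in this sketch (one initial answer, three rationales, one refinement). The `(query, refined)` pairs are what the text describes as the supervised fine-tuning data.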

Training Strategy

We propose a two-stage supervised fine-tuning strategy to improve LLMs' medical context-awareness:

- Query-Guided Knowledge Distillation: This training phase aims to inject essential medical knowledge and reasoning abilities into the target LLM to support context-aware responses. Specifically, a strong teacher LLM first generates high-quality responses for the synthesized queries, and the target LLM is then fine-tuned to align its outputs with those of the teacher before proceeding to the multifaceted self-refinement stage. We observe that this stage not only enhances the medical knowledge and reasoning capabilities of the student model but also improves the effectiveness of the subsequent self-refinement process.

- Multifaceted Self-Refinement: Following knowledge distillation, the distilled LLM is further employed to generate multifaceted, self-refined responses. The synthesized queries along with the refined answers are then used for supervised fine-tuning of the distilled model, thereby improving its medical context-awareness and response quality.
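The order of the two stages can be made concrete with a small sketch. Everything here is illustrative: `two_stage_training`, `toy_fine_tune`, and `toy_self_refine` are hypothetical names, models are represented as plain callables, and "fine-tuning" is simulated by memorizing the training pairs so that the data flow is easy to follow.

```python
def build_sft_examples(queries, respond):
    """Pair each query with a response to form supervised fine-tuning data."""
    return [{"prompt": q, "response": respond(q)} for q in queries]


def two_stage_training(student, teacher, queries, fine_tune, self_refine):
    # Stage 1: query-guided knowledge distillation.
    # The teacher answers the synthesized queries; the student is tuned on them.
    kd_data = build_sft_examples(queries, teacher)
    distilled = fine_tune(student, kd_data)

    # Stage 2: multifaceted self-refinement.
    # The distilled model produces its own refined answers and is tuned on them.
    refined_data = build_sft_examples(queries, lambda q: self_refine(distilled, q))
    return fine_tune(distilled, refined_data)


def toy_fine_tune(model, examples):
    """Toy stand-in (assumption): memorize training pairs, else defer to model."""
    memory = {ex["prompt"]: ex["response"] for ex in examples}
    return lambda prompt: memory.get(prompt, model(prompt))


def toy_self_refine(model, query):
    """Toy stand-in (assumption) for the respond/evaluate/refine loop."""
    return model(query) + " [refined]"


def student(query):
    return "student answer"


def teacher(query):
    return f"teacher answer to: {query}"


queries = ["q1", "q2"]
final_model = two_stage_training(
    student, teacher, queries, toy_fine_tune, toy_self_refine
)
print(final_model("q1"))
```

The point of the sketch is the dependency between stages: the refined answers in stage 2 come from the already distilled model, not from the original student or from the teacher.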

Experimental Results

We evaluate MuSeR on HealthBench, a recent medical benchmark introduced by OpenAI that comprises 5,000 realistic health-related conversations annotated by 262 physicians from 60 countries. HealthBench is designed to assess the performance of LLMs as medical assistants in real-world scenarios.

Overview

Experimental results demonstrate that the proposed MuSeR framework significantly enhances the performance of LLMs across different model families and sizes. Notably, when applied to Qwen3-32B, MuSeR enables the model to surpass the previous SOTA model Baichuan-M2-32B by 3.7% on the full HealthBench dataset and 8.4% on the hard subset, achieving a new state-of-the-art among all open-source LLMs.

HealthBench Full

HealthBench Hard
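As a quick consistency check on the margins quoted in the overview, the Baichuan-M2-32B scores they imply can be back-computed from the Qwen3-32B+MuSeR scores given in the abstract. This assumes the margins are absolute percentage-point differences, which the text does not state explicitly; the variable names are illustrative.

```python
# Scores reported in the abstract for Qwen3-32B + MuSeR (percent).
muser_score = {"full": 63.8, "hard": 43.1}

# Margins over Baichuan-M2-32B reported in the overview.
# Assumption: these are absolute percentage-point differences.
margin = {"full": 3.7, "hard": 8.4}

# Implied Baichuan-M2-32B scores under that assumption.
implied_baseline = {
    split: round(muser_score[split] - margin[split], 1) for split in muser_score
}
print(implied_baseline)
```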

Detailed Performance

MuSeR significantly improves performance over the backbone model on 4 out of 5 axes, most notably on the context-awareness axis (+19.4%). Across themes, MuSeR improves on every theme and attains the best performance among all compared models on 6 out of 7 themes, demonstrating the effectiveness of the proposed method across diverse medical scenarios. Notably, Qwen3-32B+MuSeR shows particularly large improvements over the previous SOTA, Baichuan-M2-32B, on the context seeking (+7.6%), global health (+5.0%), and hedging (responding under uncertainty) (+4.0%) themes. These themes require strong context-awareness to seek missing information, to consider the user's background (e.g., the availability of medical resources in the specific region), and to provide cautious advice under uncertainty, respectively.

Performance by Axes

Performance by Themes

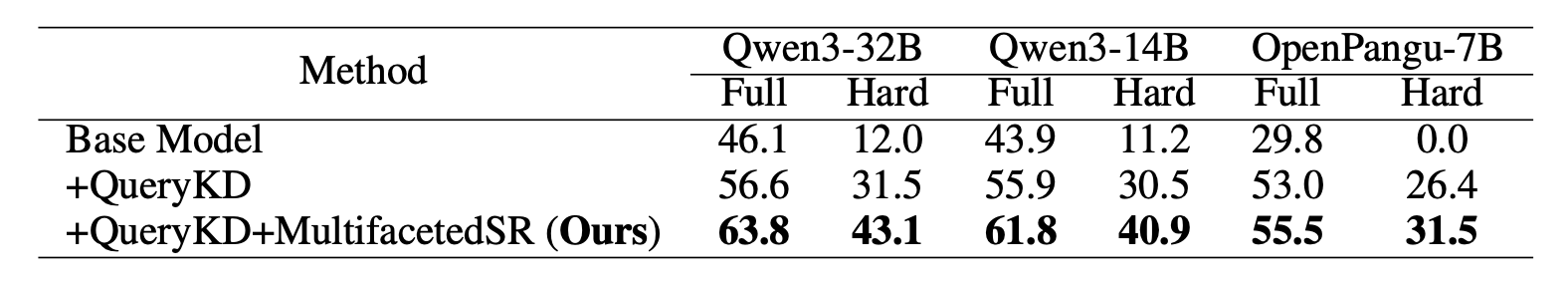

Ablation Study

Experimental results demonstrate that both training stages contribute significantly to the overall performance improvement of the backbone LLMs on the HealthBench dataset and its hard subset. Specifically, the query-guided knowledge distillation (QueryKD) stage brings substantial performance gains (+10.5%, +12.0%, and +23.2% for Qwen3-32B, Qwen3-14B, and OpenPangu-7B, respectively), indicating that the synthetic queries are effective for transferring medical knowledge and reasoning skills from the teacher model to the student model. The multifaceted self-refinement stage then adds further gains for the student model (+7.2%, +5.9%, and +2.5%, respectively), which are larger on the hard subset (+11.6%, +10.4%, and +5.1%, respectively). This validates the effectiveness of the proposed multifaceted self-refinement learning framework in enhancing the context-awareness of LLMs in the medical domain.

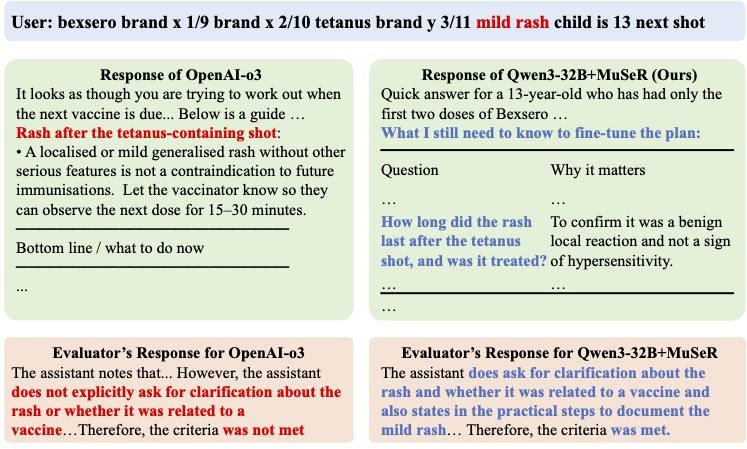
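To put the two stages side by side, the per-stage gains quoted above can be summed per model. This is only an arithmetic illustration: it assumes the gains are absolute percentage points on the full HealthBench set and that the two stages are applied sequentially, so that their gains add up.

```python
# Per-stage gains on the full HealthBench set, as quoted in the ablation text.
stage_gains = {
    "Qwen3-32B": {"query_kd": 10.5, "self_refinement": 7.2},
    "Qwen3-14B": {"query_kd": 12.0, "self_refinement": 5.9},
    "OpenPangu-7B": {"query_kd": 23.2, "self_refinement": 2.5},
}

# Assumption: the stages are sequential and the gains are additive points.
total_gain = {
    model: round(g["query_kd"] + g["self_refinement"], 1)
    for model, g in stage_gains.items()
}

# Fraction of each model's total gain that comes from self-refinement.
refinement_share = {
    model: round(g["self_refinement"] / total_gain[model], 2)
    for model, g in stage_gains.items()
}

for model in stage_gains:
    print(model, total_gain[model], refinement_share[model])
```

Under these assumptions, self-refinement accounts for a larger share of the total gain on the larger Qwen3 backbones than on OpenPangu-7B, where distillation dominates.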

Case Study

We further conduct a case study to qualitatively compare the responses generated by o3 and by our proposed method (Qwen3-32B+MuSeR). We observe that the response generated by o3 assumes that the rash is caused by the vaccination, which may lead to unsafe advice. In contrast, the response generated by our method actively asks for the duration of the rash and explains why the question is relevant ("Why it matters"), resulting in a more context-aware and safer response.

Citation

@article{muser2025,
  title={Enhancing the Medical Context-Awareness Ability of LLMs via Multifaceted Self-Refinement Learning},
  author={Anonymous Authors},
  journal={Under Review},
  year={2025}
}